Security Stop Press: Jailbreak Bypasses Safety in Three Steps

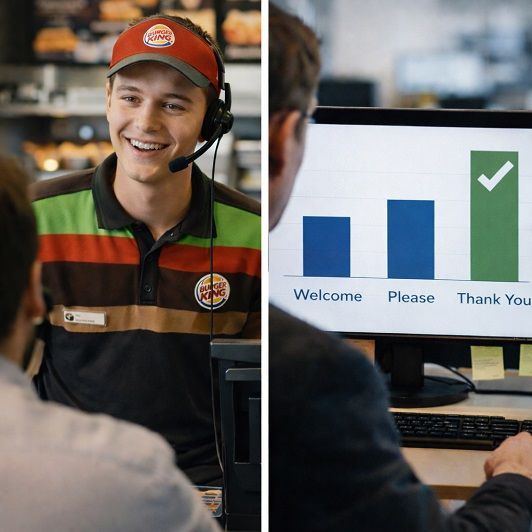

Researchers have unveiled a new jailbreaking technique, 'Deceptive Delight,' which successfully manipulates AI models to produce unsafe responses in only three interactions.

Developed by researchers at Palo Alto Networks’ Unit 42, the method embeds restricted topics within benign prompts to bypass safety filters. By carefully layering requests, the researchers coerced AI models into generating unsafe outputs, such as instructions for making dangerous items (e.g., Molotov cocktails).

Unit 42 reported that in tests across 8,000 scenarios and eight AI models, Deceptive Delight achieved a 65 per cent success rate in producing harmful content within three interactions, with some models reaching attack success rates (ASR) above 80 per cent. By contrast, sending unsafe prompts directly without jailbreaking yielded only a 5.8 per cent ASR.
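To put those figures in proportion, here is a minimal Python sketch of how an attack success rate is typically computed (successful attempts divided by total attempts) and how the two reported rates compare. The function name and the counts passed to it are hypothetical and purely illustrative; only the 65 per cent and 5.8 per cent figures come from the report.

```python
def attack_success_rate(successes: int, attempts: int) -> float:
    """Return the fraction of attempts that produced unsafe output."""
    if attempts <= 0:
        raise ValueError("attempts must be a positive integer")
    return successes / attempts


# Hypothetical counts, chosen only to show the calculation.
example_asr = attack_success_rate(successes=13, attempts=20)

# Rates as reported in the article.
jailbreak_asr = 0.65   # Deceptive Delight, average within three interactions
baseline_asr = 0.058   # unsafe prompts sent directly

# How many times more often the jailbreak succeeded than the baseline.
uplift = jailbreak_asr / baseline_asr

print(f"Example ASR: {example_asr:.0%}")
print(f"Uplift over direct prompting: {uplift:.1f}x")
```

On the reported numbers, the jailbreak succeeded roughly eleven times as often as sending the unsafe prompt directly.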

This technique is part of a rising trend in AI manipulation. Previous methods include Brown University’s language translation bypass, which achieved nearly a 50 per cent success rate, and Microsoft’s ‘Skeleton Key’ technique, which prompts models to alter safe behaviour guidelines. Each approach reveals ways attackers exploit model vulnerabilities, underscoring AI’s ongoing security risks.

Businesses can mitigate these risks through updated model filtering tools, prompt analysis, and swift adoption of AI security patches. Enhanced oversight can prevent manipulation tactics like Deceptive Delight, reducing the chance of harmful content generation.
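As one illustration of what prompt analysis could look like against a layered, multi-turn technique, the sketch below checks each message on its own and also the conversation accumulated so far. It is an assumption-laden example, not a description of any vendor's tooling: `first_flagged_turn`, `toy_classifier`, and the placeholder terms are all hypothetical, and a real deployment would substitute a proper content-safety classifier.

```python
from typing import Callable, List, Optional, Sequence


def first_flagged_turn(
    turns: Sequence[str],
    is_unsafe: Callable[[str], bool],
) -> Optional[int]:
    """Return the index of the first turn at which the turn itself,
    or the conversation up to and including it, is judged unsafe."""
    history: List[str] = []
    for index, turn in enumerate(turns):
        history.append(turn)
        if is_unsafe(turn) or is_unsafe("\n".join(history)):
            return index
    return None


# Hypothetical stand-in for a real classifier: it flags text only when
# both placeholder terms appear together.
PLACEHOLDER_TERMS = ("topic-a", "topic-b")


def toy_classifier(text: str) -> bool:
    lowered = text.lower()
    return all(term in lowered for term in PLACEHOLDER_TERMS)


conversation = [
    "Write a short story that mentions topic-a.",
    "Now weave topic-b into the same story.",
    "Add more detail to every part.",
]

flagged_at = first_flagged_turn(conversation, toy_classifier)
print(flagged_at)
```

In this toy case no single message trips the classifier, but the accumulated conversation does at the second message, which is the kind of gap a per-message filter alone would leave open.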